Why I switched from TypeScript to Rust

The BitTorrent tracker I wrote in TypeScript was facing performance issues in production. To try and get better performance without scaling, I decided to move to another language - one of the fastest - Rust. The results are extremely impressive…

Kiryuu is completely open source and can be found on GitHub.

Background

A couple of years ago I wrote kouko, an HTTP BitTorrent tracker in TypeScript. I was already quite familiar with TypeScript and Express at the time, and the core reason behind that project was that I was extremely interested (still am) in BitTorrent and wanted to learn more about it. I’d also purchased the domain mywaifu.best as a meme, so I decided to use it to host the tracker (mywaifu from here on refers to the HTTP BT tracker I host). My initial goal behind kouko was just to write my own implementation of a tracker.

The need for change

Although kouko started as a hobby project to learn more about BT, over time more and more people started using mywaifu in their torrents, which resulted in increased load on my tracker. The HTTP request made to a tracker to find peers is called an “announce”, and I was receiving over 30,000 announces per minute, which is over 500 requests per second! All this was on a 2 vCPU, 2 GB VPS from Hetzner for 3.49 EUR a month. It seemed to handle the load just fine at first, but as the rate kept growing, the TypeScript implementation reached its performance limits.

I was running a single instance of the process, and since Node.js runs JavaScript on a single thread, just one core was being used. In a lot of web server use cases, the bottleneck is waiting on some kind of I/O, typically from a database. Node.js has an extremely powerful async runtime, which usually means the developer doesn’t need to think about concurrent requests - while the process is waiting for the database to perform the query and return a result, it can handle hundreds of other connections.

But as mywaifu became more popular and the number of requests grew, this asynchronous nature could not compensate for the sheer amount of CPU time needed to simply handle all the connections, parse the requests and construct the responses. Furthermore, my “database” was purely in-memory Redis, and thus blazingly fast, so there was very little I/O wait for the async runtime to hide in the first place. Response times crept up from ~10ms to over 500ms, the Node.js process hit 100% CPU usage, and all requests started competing for time on the one core. Clearly something had to be done.

While I could try and scale my server, use Kubernetes for more resilience, or any of the other buzzwords that everyone seems to be throwing around, these solutions weren’t good enough for me. This is because they all rely on more compute resources, which translates to a higher cost! I was not interested in paying more. Well, not unless there was no other choice. Scaling horizontally (k8s or something simpler across multiple VPSes) or vertically (a beefier CPU) would both require me to shell out more money. How could I make the same hardware more performant to deal with the increasing load? Well, by increasing the efficiency of the server in the first place!

There were two ways to do this - one was to refactor kouko to be more efficient, the other was to do a rewrite. Rust is one of the most efficient programming languages, in terms of both energy efficiency and performance. Although I didn’t know any Rust, this seemed like the perfect opportunity to learn!

A note on learning

In my opinion, the best way to familiarize yourself with something new (at least technologically) is to either use it or implement it, while keeping all other factors “known”. As an example, kouko was my way of familiarizing myself with the BitTorrent protocol (specifically the tracker announce part). Since I already had experience writing HTTP servers in TypeScript using Express (albeit just RESTful APIs), I decided to use that as a starting point.

I started by reading the BEP on tracker announces, and then parsing the useful stuff from the query. As I kept moving onto the next step, I had to make design choices on my end about how my tracker would implement certain things, based on what I was learning about the protocol. After I had finished the “MVP” of kouko, I was quite familiar with BT announces, and felt confident that my work was “correct”, even if not performant.

Using this project as a way of learning Rust is part of the same philosophy - since I am (now) familiar with the BT protocol, the variable was the programming language - I knew what to do but not how to do it. I’d previously attempted learning Rust using the Rust Book, and sometimes YouTube tutorials. While these are quite good and informative, I never really got too interested as the language seemed “complicated” at first, probably since I was not really building anything.

However, writing kiryuu forced me to learn the various ins and outs of the language, searching for how to do things when I didn’t know, and over the course of a few weeks made me fall in love with the language. I would highly recommend, not just for Rust or BitTorrent but for anything, to try and learn by doing. At least for me, it makes a massive difference, especially in terms of motivation and completion.

The Rust Development Experience

I started with the absolute basics to avoid getting in over my head at the beginning, while still being relevant to the goal of rewriting kouko. For instance, one thing I needed to do was convert the infohash from the URL query to a hex string. For some context, the infohash is 20 bytes - the SHA-1 hash of a part of the torrent file. The BT protocol encodes this binary data by using either the equivalent URL-safe ASCII character for the byte, or percent-encoding if the character is not URL-safe. For instance, the bytes [0x41, 0xC3] would be encoded as the string A%C3. I needed to convert this representation to the hex string of the hash (41c3 for this example), so I wrote a function just for that, along with tests.
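To make the conversion concrete, here is a minimal sketch of what such a function could look like. It is an illustration only, not the actual kiryuu code: the names percent_decode, to_hex, infohash_to_hex and hex_val are made up for this example, and it assumes that any byte not preceded by % is taken literally.

```rust
/// Convert a single ASCII hex digit to its numeric value.
fn hex_val(c: u8) -> Option<u8> {
    match c {
        b'0'..=b'9' => Some(c - b'0'),
        b'a'..=b'f' => Some(c - b'a' + 10),
        b'A'..=b'F' => Some(c - b'A' + 10),
        _ => None,
    }
}

/// Decode a percent-encoded string into raw bytes.
/// Returns None if a '%' is not followed by two hex digits.
fn percent_decode(encoded: &str) -> Option<Vec<u8>> {
    let raw = encoded.as_bytes();
    let mut out = Vec::with_capacity(raw.len());
    let mut i = 0;
    while i < raw.len() {
        if raw[i] == b'%' {
            let hi = hex_val(*raw.get(i + 1)?)?;
            let lo = hex_val(*raw.get(i + 2)?)?;
            out.push((hi << 4) | lo);
            i += 3;
        } else {
            // Assumption: unescaped bytes stand for themselves.
            out.push(raw[i]);
            i += 1;
        }
    }
    Some(out)
}

/// Render bytes as a lowercase hex string.
fn to_hex(bytes: &[u8]) -> String {
    bytes.iter().map(|b| format!("{:02x}", b)).collect()
}

/// Decode a percent-encoded infohash and return its 40-character hex form.
/// Returns None if the input is malformed or is not exactly 20 bytes.
fn infohash_to_hex(encoded: &str) -> Option<String> {
    let bytes = percent_decode(encoded)?;
    if bytes.len() != 20 {
        return None;
    }
    Some(to_hex(&bytes))
}
```

With this sketch, percent_decode("A%C3") yields the bytes [0x41, 0xC3], while infohash_to_hex rejects that input because it is not 20 bytes long. Checking the length up front means malformed announces can be rejected before they ever touch the database.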

Slowly I made incremental progress, adding the Redis part of the code to interact with the “database”, and finally adding an HTTP server component, to be able to actually serve requests. Throughout the whole period, I was constantly searching for how to implement things, reading documentation, and asking on their official Discord as well! (Massive thanks to Alice Ryhl for pointing me in the right direction with async locks!)

The Result

Although it is not yet in a final state for general use, I got kiryuu to a state where it could be deployed to my server as a replacement for kouko. And the performance difference is absolutely incredible!

Using Redis as the database allows for extremely fast I/O since everything is in memory. Even kouko could achieve sub-millisecond latency with just a few requests, but it reached its limits at just over 500 requests per second. Kiryuu, however, was able to handle the previous peaks of ~666 requests per second while maintaining sub-millisecond latency! Since the CPU time per request is so low in Rust, requests can be handled much faster, and don’t waste time waiting on compute resources.

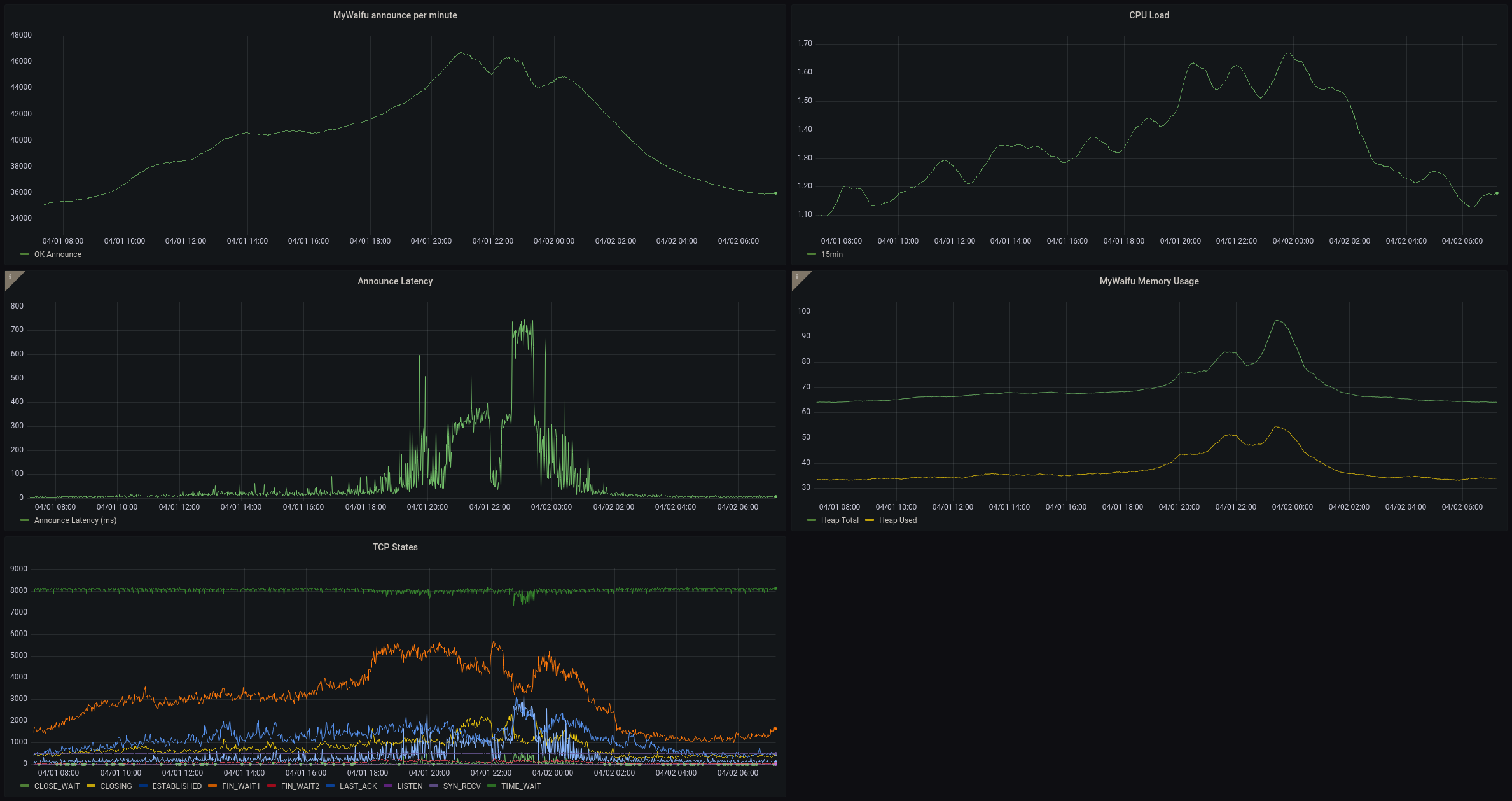

Here is a 9-day view from around the time I moved from TypeScript to Rust. Try and guess when I made the change ;)

I’ve been running kiryuu for around two months now, and it’s been growing steadily. I currently see peaks of ~1100 requests per second while tracking 600,000+ torrents, still maintaining sub-millisecond latency on average, with the server using just around 30MiB of RAM!

Overall, I am extremely happy with Rust and its performance. I am still a TypeScript fanboy, and will prefer it for prototyping (for instance when I was designing a P2P video streaming protocol), as in many cases the performance hit doesn’t matter, and the Node.js async runtime is just much easier to use. But I do believe there is a strong use case for Rust - especially to get more performance out of the same resources!

Further work

I am currently analyzing the performance of kiryuu using CPU profiling and flamegraphs, and trying to make the hot functions more efficient. You can peek at some of the investigation here.

The serde deserialization seems to be an expensive step, so a potential avenue could be to write a custom deserializer to parse the incoming HTTP request query.
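As a rough idea of what hand-parsing the query could look like, here is a minimal sketch. It is not kiryuu’s code and it is not a serde Deserializer implementation; it is a plain hand-written parser shown as one possible alternative. The AnnounceQuery struct and parse_announce_query function are hypothetical names, and the keys are a subset of the standard announce parameters.

```rust
/// A few announce parameters, borrowed straight from the query string
/// so that no new strings are allocated (hypothetical struct).
#[derive(Debug, Default, PartialEq)]
struct AnnounceQuery<'a> {
    /// Still percent-encoded; decoding is left to a later step.
    info_hash: Option<&'a str>,
    port: Option<u16>,
    left: Option<u64>,
    event: Option<&'a str>,
}

/// Walk the query once, keeping only the keys we care about.
/// Unknown keys and pairs without '=' are ignored; numeric values
/// that fail to parse are left as None.
fn parse_announce_query(query: &str) -> AnnounceQuery<'_> {
    let mut out = AnnounceQuery::default();
    for pair in query.split('&') {
        if let Some((key, value)) = pair.split_once('=') {
            match key {
                "info_hash" => out.info_hash = Some(value),
                "port" => out.port = value.parse().ok(),
                "left" => out.left = value.parse().ok(),
                "event" => out.event = Some(value),
                _ => {}
            }
        }
    }
    out
}
```

Whether something like this actually beats serde would have to be confirmed by profiling; the point is only that the announce query has a small, fixed set of keys, so a single pass over the string is enough.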

Questions?

I would be happy to answer any questions you have! You can contact me via email, and please use my PGP key to encrypt all communications. (Backup E-Mail (PGP Key))